Load Testing with ab, the Apache Benchmark Command-Line Tool

ab is a simple load testing command from Apache. It benchmarks your HTTP server by automating a scenario of sending multiple requests with concurrent clients.

The Apache web server provides a command-line tool, ab. It is available by default in macOS and Linux terminals, provided Apache2 is installed. On Debian-based systems, the tool is part of the apache2-utils package, so you may need to run apt-get install apache2-utils to use it. On Windows, download the Apache binaries, extract them, and run the ab.exe executable from the apache/bin/ folder.

ab sends many automated requests to measure the performance of your server. In essence, it determines how many requests per second an HTTP server can serve, and its behavior can be tuned with options.

Usage: ab [options] [http[s]://]hostname[:port]/path

[options] are:

- -n requests: Number of requests to perform.
- -c concurrency: Number of multiple requests to make at a time (range 0 to 20000).
- -t timelimit: Maximum number of seconds to spend on benchmarking. This implies -n 50000.
- -s timeout: Maximum number of seconds to wait for each response. The default is 30 seconds.
- -p postfile: File containing data to POST. Remember to also set -T.
- -u putfile: File containing data to PUT. Remember to also set -T.
- -T content-type: Content-Type header to use for POST/PUT data.
- -v verbosity: How much troubleshooting information to print.
- -A attribute: Add Basic WWW Authentication; the attribute is a colon-separated username and password.
- -P attribute: Add Basic Proxy Authentication; the attribute is a colon-separated username and password.
- -X proxy:port: Proxy server and port number to use.
- -k: Use the HTTP KeepAlive feature.
- -e filename: Output a CSV file with the percentages served.
- -r: Don't exit on socket receive errors.

For the full list of available options, run $ ab -h (or $ ab -help).
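The -A and -P options take a colon-separated username:password pair. Under the standard HTTP Basic authentication scheme, that pair travels Base64-encoded in an Authorization header (Proxy-Authorization for a proxy). The Python sketch below shows only that encoding step; it is an illustration of the Basic scheme, not ab's own code, and the credentials and the `basic_auth_header` name are hypothetical.

```python
import base64


def basic_auth_header(credentials: str) -> str:
    """Return an HTTP Basic Authorization value for 'username:password'.

    The argument uses the same colon-separated form that ab's -A option takes.
    """
    if ":" not in credentials:
        raise ValueError("expected 'username:password'")
    token = base64.b64encode(credentials.encode("utf-8")).decode("ascii")
    return "Basic " + token


if __name__ == "__main__":
    # Hypothetical credentials, for illustration only.
    print("Authorization:", basic_auth_header("user:pass"))
```

With the same hypothetical credentials, the ab invocation would look like $ ab -n 100 -c 10 -A user:pass https://api.example.com/. Because Base64 is an encoding rather than encryption, only send Basic credentials over https.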

Example

$ ab -n 100 -c 10 https://api.example.com/

This sends 100 requests in total (-n) to https://api.example.com/, keeping up to 10 requests in flight at a time (-c).
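To make the -n/-c semantics concrete, here is a rough Python sketch of the same idea: a fixed pool of `concurrency` workers shares `total` requests, so at most `concurrency` requests are in flight at once. It is not ab's implementation. The `run_load` helper and the throwaway local server on 127.0.0.1 are assumptions added so the example runs offline.

```python
import http.client
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class OkHandler(BaseHTTPRequestHandler):
    """Throwaway handler: answers every GET with a tiny 200 response."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # silence per-request logging
        pass


class LocalServer(ThreadingHTTPServer):
    request_queue_size = 128  # accept bursts of concurrent connections


def fetch(host: str, port: int) -> int:
    """Send one GET on a fresh connection and return the HTTP status code."""
    conn = http.client.HTTPConnection(host, port, timeout=30)
    try:
        conn.request("GET", "/")
        resp = conn.getresponse()
        resp.read()
        return resp.status
    finally:
        conn.close()


def run_load(host: str, port: int, total: int, concurrency: int):
    """Send `total` requests with at most `concurrency` in flight (like -n / -c)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        statuses = list(pool.map(lambda _: fetch(host, port), range(total)))
    return statuses, time.perf_counter() - start


if __name__ == "__main__":
    server = LocalServer(("127.0.0.1", 0), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    statuses, elapsed = run_load("127.0.0.1", port, total=100, concurrency=10)
    ok = sum(1 for s in statuses if 200 <= s < 300)
    print(f"{len(statuses)} requests, {ok} 2xx, {elapsed:.3f} s, "
          f"{len(statuses) / elapsed:.1f} req/s")
    server.shutdown()
    server.server_close()
```

The sketch records every status code instead of stopping on a non-2xx reply, which is roughly how ab keeps counting during a run. A socket error, however, would still abort it, unlike ab with -r.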

Testing an example server API

As an example, assume we are load testing a cloud-hosted test instance of a server that serves XYZ APIs.

$ ab -r -c 100 -n 500 -s 30 -k https://api.example.com/

Assume this URL is a GET endpoint that clients (mobile apps) call to receive a certain response.

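Here we send 500 requests in total (-n 500), with up to 100 of them in flight at any moment (-c 100). The -r flag keeps ab running on socket receive errors, -s 30 waits up to 30 seconds for each response, and -k enables HTTP KeepAlive.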

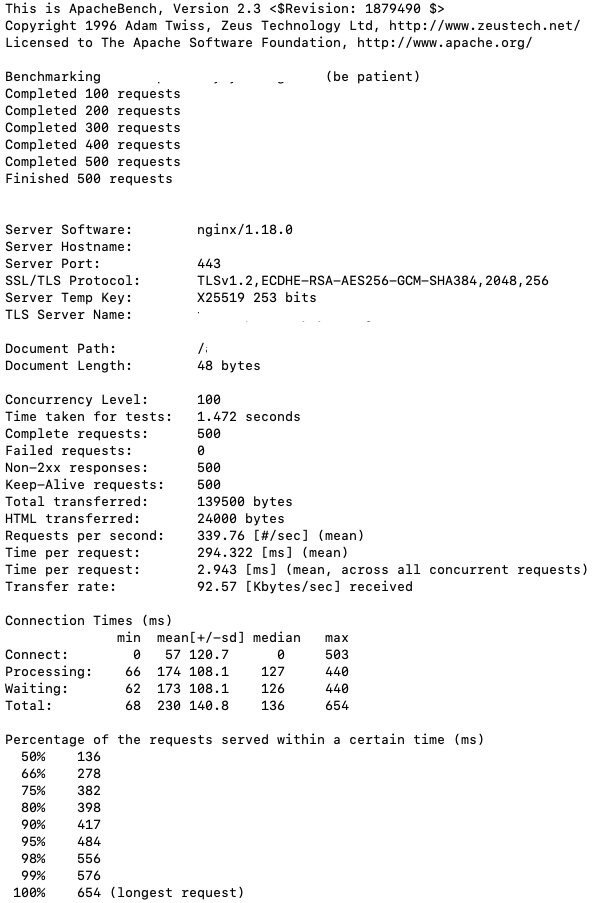

And here's a simulated result from the terminal.

Most of the output is pretty self-explanatory for a developer. Let's dive into some of the timing data.

The test took 1.472 seconds to complete. We set -n 500, so 500 requests were sent in total, and none of them failed.

On average, the server received, processed, and responded to about 340 requests per second. Requests per second is a key metric. It is the number of requests the web server serves per second, calculated as the total number of requests divided by the total time taken: 500 / 1.472 s ≈ 339.7 (mean). The higher this number, the better.
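The arithmetic is easy to check in code. The sketch below plugs in the figures from this run (500 requests, -c 100, 1.472 s). Alongside requests per second, it derives the two mean "time per request" values that ab also reports: one per concurrent client (concurrency × total time ÷ requests) and one across all concurrent requests (total time ÷ requests). The `summarize` name is hypothetical.

```python
def summarize(total_requests: int, concurrency: int, time_taken_s: float) -> dict:
    """Derive ab-style throughput figures from a finished run."""
    rps = total_requests / time_taken_s  # requests per second (mean)
    # Mean time one concurrent client waits for each of its requests.
    per_request_ms = concurrency * time_taken_s * 1000 / total_requests
    # Mean wall-clock time per request across all clients; equals 1000 / rps.
    across_all_ms = time_taken_s * 1000 / total_requests
    return {
        "requests_per_second": round(rps, 2),
        "time_per_request_ms": round(per_request_ms, 3),
        "time_per_request_across_all_ms": round(across_all_ms, 3),
    }


if __name__ == "__main__":
    # Figures from the example run: -n 500, -c 100, 1.472 s total.
    print(summarize(500, 100, 1.472))
```

For this run that works out to about 339.67 requests per second, 294.4 ms per request for each concurrent client, and 2.944 ms per request across all clients.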

The minimum time to serve a request was 68 ms (the fastest request), and the maximum was 654 ms (the slowest request).
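ab derives figures like these from the time it records for each individual request. It also prints a table showing what percentage of requests were served within a given time, and the -e option writes similar percentages to a CSV file. The Python sketch below produces a comparable summary for a small, hypothetical list of per-request times (not data from the run above). It uses the nearest-rank percentile method, and ab's own percentile calculation may differ in detail.

```python
import statistics


def latency_summary(latencies_ms, percentiles=(50, 90, 99)):
    """Min, mean, max and nearest-rank percentiles for per-request times (ms)."""
    if not latencies_ms:
        raise ValueError("no samples")
    ordered = sorted(latencies_ms)
    n = len(ordered)
    summary = {
        "min": ordered[0],                  # fastest request
        "mean": statistics.fmean(ordered),
        "max": ordered[-1],                 # slowest request
    }
    for p in percentiles:
        # Nearest rank = ceil(p * n / 100), computed with integers to avoid
        # floating-point surprises; clamp to at least the first sample.
        rank = max(1, (p * n + 99) // 100)
        summary[f"p{p}"] = ordered[rank - 1]
    return summary


if __name__ == "__main__":
    # Hypothetical per-request times in milliseconds, for illustration only.
    samples = [68, 120, 95, 210, 180, 654, 140, 101, 88, 300]
    print(latency_summary(samples))
```

Percentiles are often more informative than the maximum alone: a single slow outlier sets the max, while p90 or p99 shows how many requests were actually slow.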

Keep-Alive header

When a client sends an HTTP request, it opens a socket connection to the server. The server accepts the request, sends the response, and the connection is closed. This cycle repeats for every request: a second request goes through the same process, and opening and closing a socket every time becomes a real burden once there are many concurrent requests. This overhead is often called connection initialization time. By sending the keep-alive header (Connection: keep-alive), however, the socket is opened once and reused for subsequent requests rather than closed after every response. The two most important parameters here are the timeout (how long an idle connection stays open) and the maximum number of requests allowed on one connection.

As an analogy, imagine 50 people queuing outside a room to collect their paychecks, while inside an HR officer is ready to process their requests. Think of the HR officer as the server, the room as the socket connection, the door as the header, the paychecks as the requests, and the 50 people as clients. Without keep-alive (ab's -k option), each person opens the door, receives their cheque, and closes the door to complete the transaction. That is the plain client-server request/response cycle. With keep-alive, the first person to enter opens the door, receives the cheque, and leaves the door open on the way out. The others in the queue don't need to reopen it, which saves each of them time and makes the whole payroll process much quicker. However, the last (50th) person must close the door after leaving so that a stranger can't pose as a 51st person and walk off with a paycheck.
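To see the difference keep-alive makes, the Python sketch below starts a throwaway local server that speaks HTTP/1.1 (so it keeps connections open) and counts how many TCP connections it accepts. Ten requests that each open a fresh connection should cost ten connections. Ten requests sent over one reused `http.client` connection should cost one. The handler, the `CountingServer` class, and the helper functions are illustrative assumptions, not part of ab.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class KeepAliveHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # HTTP/1.1 keeps connections open by default

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))  # lets the client reuse the socket
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass


class CountingServer(ThreadingHTTPServer):
    """Counts accepted TCP connections (one per socket a client opens)."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.connections = 0
        self._lock = threading.Lock()

    def process_request(self, request, client_address):
        with self._lock:
            self.connections += 1
        super().process_request(request, client_address)


def send_requests(port: int, n: int, keep_alive: bool) -> None:
    """Send n GETs, reusing one socket only when keep_alive is True."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        for _ in range(n):
            conn.request("GET", "/")
            conn.getresponse().read()  # drain the body so the socket can be reused
            if not keep_alive:
                conn.close()  # the next request() reconnects on a new socket
    finally:
        conn.close()


def count_connections(n: int, keep_alive: bool) -> int:
    server = CountingServer(("127.0.0.1", 0), KeepAliveHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        send_requests(server.server_address[1], n, keep_alive)
        return server.connections
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print("without keep-alive:", count_connections(10, keep_alive=False), "connections")
    print("with keep-alive:   ", count_connections(10, keep_alive=True), "connections")
```

In the analogy's terms, the first run opens and closes the door ten times, and the second run opens it once.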

Testing POST requests

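$ ab -n 100 -c 10 -p example.json -T application/json -rk https://api.example.com/

This sends 100 POST requests, 10 at a time, each carrying the contents of example.json as the request body with a Content-Type of application/json. -rk combines the -r and -k flags.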
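Roughly speaking, each of those requests pairs the file's bytes with the Content-Type given by -T. The Python sketch below sends one such request to a throwaway local echo server so you can inspect what arrives. The payload written to example.json, the `EchoHandler`, and the `post_file` helper are hypothetical. The sketch shows the shape of a single request, not ab's exact wire format.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path


class EchoHandler(BaseHTTPRequestHandler):
    """Replies with the Content-Type and body it received, as JSON."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        received = self.rfile.read(length)
        body = json.dumps({
            "content_type": self.headers.get("Content-Type"),
            "body": received.decode("utf-8"),
        }).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass


def post_file(host: str, port: int, path: Path, content_type: str) -> dict:
    """POST the raw bytes of `path` with the given Content-Type (like -p and -T)."""
    conn = http.client.HTTPConnection(host, port, timeout=30)
    try:
        conn.request("POST", "/", body=path.read_bytes(),
                     headers={"Content-Type": content_type})
        return json.loads(conn.getresponse().read())
    finally:
        conn.close()


if __name__ == "__main__":
    payload = Path("example.json")
    payload.write_text(json.dumps({"name": "test", "value": 42}))  # hypothetical payload
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    print(post_file("127.0.0.1", server.server_address[1], payload, "application/json"))
    server.shutdown()
    server.server_close()
```

If -T is omitted, the server may not recognize the body as JSON, which is why ab's help reminds you to set it together with -p.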

Important Notes

- Load testing with a very large number of requests or a high concurrency level is effectively a simulated DoS attack on a web server. Make sure you know where and why you are load testing. Load testing an unknown server address can get your IP address blacklisted, or the server's security systems may block your origin IP, treating you as an attacker.

- The -c concurrency option lets you test for possible race conditions and deadlocks in asynchronous scenarios.
- Always run tests from a separate machine rather than from the web server itself. Benchmarking your localhost (127.0.0.1) may be a fine playground, but it will not yield realistic results. Also, running several benchmarks concurrently against multiple URLs may skew your results.